Sorry if this is a dumb question, but does anyone else feel like technology - specifically consumer tech - kinda peaked over a decade ago? I’m 37, and I remember being awed between like 2011 and 2014 with phones, voice assistants, smart home devices, and what websites were capable of. Now it seems like much of this stuff either hasn’t improved all that much, or is straight up worse than it used to be. Am I crazy? Have I just been out of the market for this stuff for too long?

- Bang. We needed to stop right effing there!

Yes you are correct, it’s worse now. At first it was creative, innovative products that made things more convenient or fun, or at least didn’t harm its users. Now all the new things are made by immature egotistical billionaire techbros: generative AI which has ruined the internet by polluting it with so much shit you can’t get real information any more, not to mention using up all our power and water resources, the enshittification of Web 2.0, Web 3.0 that was pure shit from the get-go, IOT “smart” appliances like TVs, doorbells, thermostats, refrigerators that spy on you and your neighbors, shit “self-driving” killer cars that shouldn’t be allowed on the roads, whatever the hell that new VR Metaverse shit is, ads, ads, ads, ads, and on and on. It’s a tech dystopia.

Technology? No.

Consumer Electronics? Yes. Or at least there’s a debatable transition and cutoff point.

Did they have this tech 10 years ago?

I rest my case

Is that a dick under the boobs?

Are you kink shaming?

No it’s a banana cleaner for husband.

Where can i buy this?

The device called novint falcon, you might find it in eBay. You can get other haptic devices but usually the price range is higher.

Design wise, absolutely peaked in the 90s/2000s. Now everything looks like a copy of each other with uninspired designs across the board.

In terms of what it has to offer, I personally don’t think so. Couldn’t imagine going back 10-20 years ago and not having a device like my Steam Deck that can play computer games on the go (laptop not included since when are you realistically pulling out a laptop on a drive when heading out for errands?) or having a laptop not as thin as my current laptop or even just the touchscreen feature. I also couldn’t imagine going back 20 years ago and not having a 1 or 2 TB portable external hard drive (or if they were out, being a lot more expensive than now).

The PSP is 20 years old now. Absolutely massive game library, and definitely on par with the console and PC games at the time.

The game library is well worth revisiting on something like a Retroid Pocket with upscaling.

As someone who didn’t have a PSP back then or even now, I’ll take your word on it.

Oh, you seem like you know a thing or two. I want to get into the PSP library but kind of missed everything about it. Got any favorites to recommend?

I sold mine back in 2010, so I’m just rediscovering some old and newer gems on the PS vita and retroids. I probably only scratched the surface back in the day, but I’ll list some gems that come to mind. A lot are probably PSX/PS2 ports.

- Burnout legends/dominator

- Dragoneer’s Aria

- Crazy taxi

- God of war

- Ghostbusters (feat. the OG cast)

- D&D tactics

- Rock band unplugged

- LEGO Star wars

- Monster hunter

The whole library is almost 2000 games, and some 250+ exclusive.

I might be simple to please but I think 1080p or 2160p is just peak to me. I find it very difficult to notice differences between 1080p and 2160p but moreso with 2160p and 4K. When Blu-Ray came out, they were of course hamming up Blu-Ray as the shit and DVD was now seen as inferior. I never really cared for what Blu-Ray had to offer at the time of its debut, because DVD quality was more than sufficient to me. It was already far better than VHS; the comparison between VHS and DVD was night and day.

People tend to like tricking others into going into the more premium and expensive options of the latest tech with dishonest comparisons. You see this all the time with graphical comparisons with games and movies. Where they’ll deliberately pixelate what they see as an inferior visual and sharpen the later options. It’s just dishonest and operates on an extreme bias.

I think new tech is still great, I think the issue is the business around that tech has gotten worse in the past decade

Agree. 15+ years ago tech was developed for the tech itself, and it was simply run as a service, usually for profit.

Now there’s too much corporate pressure on monetizing every single aspect, so the tech ends up being bogged down with privacy violations, cookie banners, AI training, and pretty much anything else that gives the owner one extra annual cent per user.

[off topic?]

Frank Zappa said something like this: in the 1960s a bunch of music execs who liked Frank Sinatra and Louis Armstrong had to deal with the new wave coming in. They decided to throw money at every band they could find and as a result we got music ranging from The Mamas & the Papas to Iron Butterfly and beyond.

By the 1970s the next wave of record execs had realized that Motown acts all looked and sounded the same, but they made a lot of money. One Motown was fantastic, but dozens of them meant that everything was going to start looking and sounding the same.

Similar thing with the movies. Lots of wild experimental movies like Easy Rider and The Conversation got made in the 1970s, but when Star Wars came in the studios found their goldmine.

But even then, there were several gold mines found in the 1990s, funded in part due to the dual revenue streams of theater releases and VHS/DVD.

You’ve got studios today like A24 going with the scattershot way of making movies, but a lot of the larger studios got very risk averse.

Just saw Matt Damon doing the hot wings challenge. He made a point about DVDs. He’s been producing his own stuff for decades. Back in the 1990s the DVD release meant that you’d get a second payday and the possibility of a movie finding an audience after the theatrical run. Today it’s make-or-break the first weekend at the box office.

Aka “enshittification”

Enshittification was always a thing but it has gotten exponentially worse over the past decade. Tech used to be run by tech enthusiasts, but now venture capital calls the shots a lot more than it used to.

You know this happened with cars also, until there is a new disruption by a new player or technology - companies are just coasting on their cash cows. Part of the market cycle I guess.

Lots of the privacy violations already existed, but then the EU legislated first that they had to have a banner vaguely alluding to the fact that they were doing that kind of thing, and later, with GDPR, that they had to give you the option to easily opt-out.

What’s crazy is that they were already making unbelievable amounts of money, but apparently that wasn’t enough for them. They’d watch the world burn if it meant they could earn a few extra pennies per flame.

Well it is literally not going as fast.

The rate of “technology” (most people mean electronics) advancement was because there were a ton of really big innovations in a short time: cheap PCBs, video games, internet, applicable fiber optics, wireless tech, bio-sensing, etc…

Now, all of the breakthrough inventions in electronics have been done (except chemical sensing without needing refillable buffer or reactive materials), Moore’s law is completely non-relevant, and there are a ton of very very small incremental updates.

Electronics advancements have largely stagnated. MCUs used 10 years ago are still viable today, which was absolutely not the case 10 years ago, as an example. Pretty much everything involving silicon is this way. Even quantum computing and supercooled computing advancements have slowed way down.

The open source software and hardware space has made giant leaps in the past 5 years as people now are trying to get out from the thumb of corporate profits. Smart homes have come a very long way in the last 5 years, but that is very niche. Sodium ion batteries also got released which will have a massive, mostly invisible effect in the next decade.

Electronic advancement, if you talk about cpus and such, hasn’t stagnated, its just that you don’t need to upgrade any more.

I have a daily driver with 4 cores and 24GB of RAM and that’s more than enough for me. For example.

It has absolutely stagnated. Earlier transistors were becoming literally twice as dense every 2 years. Clock speeds were doubling every few years.

In 2000, the first 1GHz single-core CPU was released by AMD.

2010 the Intel core series came out. I7 4 cores clocked up to 3.33GHz. Now, 14 years later we have sometimes 5GHz (not even double) and just shove more cores in there.
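To put rough numbers on the clock-speed figures quoted in this comment, here is a minimal Python sketch. It assumes smooth exponential growth between the quoted data points and looks only at peak clock speed, not core count or per-clock performance; the function name `doubling_time_years` is made up for illustration.

```python
import math

def doubling_time_years(start_ghz, end_ghz, years):
    """Years needed to double, assuming smooth exponential growth between two points."""
    return years * math.log(2) / math.log(end_ghz / start_ghz)

# Peak clock speeds as quoted in the comment (not a measure of overall performance).
early = doubling_time_years(1.0, 3.33, 10)  # 2000 -> 2010
late = doubling_time_years(3.33, 5.0, 14)   # 2010 -> 14 years later

print(f"2000-2010: clock speed doubles roughly every {early:.1f} years")
print(f"2010 onward: clock speed doubles roughly every {late:.1f} years")
```

With these inputs the implied doubling time comes out several times longer for the later span than for 2000 to 2010, which is the slowdown being described. It says nothing about gains from extra cores.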

What you said “it’s just that you don’t need to upgrade anymore” is quite literally stagnation. If it was a linear growth path from 1990 until now, every 3-5 years, your computer would be so obsolete that you couldn’t functionally run newer programs on them. Now computers can be completely functional and useful 8-10+ years later.

However, stagnation isn’t bad at all. It allows open source and community projects with fewer resources to catch up, and it prevents a shit ton of e-waste. The whole capitalistic growth growth growth at any cost is not ever sustainable. I think computers now, while less exciting, have become much more versatile tools because of stagnation.

“Moore’s law is dead” is so lame, and wrong too.

Check out SSD, 3D memory, GPU…

If you do not need to upgrade, that doesn’t mean things aren’t getting better (they are), just that you don’t need it or don’t feel it is making useful progress for your use case. Thinking that, because of this, it doesn’t advance is quite the egocentric worldview IMO.

Others need the upgrades, like the crazy need for processing power in AI or weather forecasts or cancer research etc.

GPU advances have also gone way way down.

For many years, YoY GPU increases led to node shrinkages, and (if we simplify it to the top tier card) huge performance gains with slightly more power usage. The last 4-5 generations have seen the exact opposite: huge power increases closely scaling with performance increases. That is literally stagnation. Also they are literally reaching the limit of node shrinkage with current silicon technology, which is leading to larger dies and way more heat to try to get even close to the same generational performance gain.

Luckily they found other uses for GPU acceleration. Just because there is an increase in demand for a new use case does not, in any way, mean that the development of the device itself is still moving at the same pace.

That’s like saying that a leg of a chair is reaching new heights of technological advancement because they used the same chair leg to be the leg of a table also.

It is a similar story with memory. They are literally just packing more dies in a PCB or layering PCBs outside of a couple niche ultra-expensive processes made for data centers.

My original comment was also correct. There is a reason why >10 year old MCUs are still used in embedded devices today. That doesn’t mean that it can’t still be exciting finding new novel uses for the same technology.

Again, stagnation ≠ bad

The area where electronics technology has really progressed quite a bit is Signal Integrity and EMC. The things we know now and can measure now are pretty crazy and enable the ultra high frequency and high data rates that come out in the new standards.

This is not about pro gamer upgrades. This is about the electronics (silicon based) industry (I am an electronics engineer) as a whole

Dude, repeat it all you want, Moore’s law still rules lol.

The question op is posing is:

Which new tech?

In the decade op’s talking about everything was new. The last ten years nothing is new and all just rehash and refinements.

ML, AI, VR, AR, cloud, saas, self driving cars (hahahaha) everything “new” is over a decade old.

In my opinion as an engineer, methods like the VDI2206, VDI2221 or ISO9000 have done irreparable damage to human creativity. Yes, those methods work to generate profitable products, but by methodizing the creative process you have essentially created an echo chamber of ideas. Even if creativity is strongly encouraged by those methods in the early stages of development, the reality often looks different. A new idea brings new risks, a proven idea often brings calculable profits.

In addition to that, thanks to the Chinese, product life cycles have gotten incredibly short, meaning that to generate a constant revenue stream, a new product must have finished development while the previous one hasn’t even reached its peak potential. As a consequence, new products have only marginal improvements because there is no time for R&D to discover bigger progressive technologies between generations. Furthermore, the previous generation is usually sold alongside the next one, therefore a new product cannot be so advanced as to make the previous one completely obsolete.

If you really want to see this with your own eyes, get a bunch of old cassette players from the 90s from different manufacturers. If you take them apart you can easily see how different the approaches were to solve similar problems back in the day.

Feature per dollar possibly yes. Technology itself not necessarily.

The issue is the market was much more competitive 10+ years ago which led to rapid innovation and the need for rivals to keep up.

Today that no longer exists in so many areas so a lot of existing tech has stagnated heavily.

For example, Google Maps was a very solid platform in 2014 bringing in a ton of new navigation features and map generation tech.

Today, the most solid consumer map nav is probably Tesla’s map which utilizes Valhalla, a very powerful open source routing engine, that’s also used on OpenStreetMap and OsmAnd.

This is a very huge improvement from 2014 Google Maps.

Except the most used map app is still essentially 2014 Google Maps because Google cornered the market so they no longer have any need to innovate or keep up. In fact it’s actually worse since they keep removing or breaking features every update in an attempt to lower their cloud running costs.

You can apply this to a lot of tech markets. Android is so heavily owned by Google, no one can make a true competitor OS. Nintendo no longer needs to add big handheld features because the PSP no longer exists. Smart home devices run like total junk because everyone just plugs it into the same cloud backend to sell hardware. The de facto way to order things online is Amazon. Amazon is capable of shipping within a week, but chooses not to for free shipping to entice you into buying prime, and because they don’t have a significant competitor. Every PC sold is still spyware windows because every OEM gets deals with Microsoft to sell their OS package.

Even though the hardware always improves, the final OEM can screw it all up by simply delivering an underwhelming product in a market they basically own, and people will buy because there is no other choice or competition to compare to.

Steam deck: am I a joke to you

I actually hope Steam Deck’s success does force Nintendo to take them seriously, but at the moment their market share is much less overlapped because the Deck primarily offers PC games, even though Switch emulation is possible too.

Also the $400 entry model price would sound even more appealing if the Switch 2 comes out with a similar price. At that point Steam Deck is a steal lol.

3M Steam Deck users vs 100M+ Switch users: Yes.

It all went downhill when the expectation of an always-on internet became the norm. That gave us:

- “Smart” appliances that have no business being connected to the internet

- “Smart” TVs that turned into billboards we pay to have in our homes

- Subscription everything as a service

- Zero-day patches for all manner of software / video games (remember when software companies had to actually release finished/working software? Pepperidge Farm remembers)

- Planned obsolescence and e-waste on steroids where devices only work with a cloud connection to the manufacturer’s servers or as long as the manufacturer is in business to keep a required app up to date

Other than hardware getting more powerful and sometimes less expensive, every recent innovation has been used against us to take away the right to own, repair, and have any control over the tech we supposedly own.

I’m not sure about the touch displays on cars.

How long does a Chinese tablet last, 10, 12 years ? If you keep it safely stored and don’t drop it.

The things in cars seem to be even cheaper, they only use phone uPs designed to last no more than a few years. And they’re roasted in hot weather, frozen and shaken to bits.

Good luck finding one of them in a few years, assuming they can be taken out at all without ripping up the dash.

I hate touch screens in general, so don’t get me started on how much I hate them in cars lol.

Kangoo 2014 gang checking in!

I also have knobs on my stovetop for heating my food thankyouverymuch.

And to force subscriptions, ads and tracking, the tech is getting more and more locked down.

Not just flashing phones and wifi routers, but you may not even watch high quality video, even though you’re paying a subscription, if your device’s HW and SW don’t conform.

If something gets discontinued, it’s not just that it may be unsafe to use or be too slow for modern use, no, look at cloud-managed network gear. The company decides it’s a paperweight, and it is. And this is going to just extend further.

Not discounting anything you listed, but I overcome lots of this by being patient. I find it best to let the dust settle on everything now. I don’t even see new movies till like, the next year. Why be a beta tester for enshittification

One of the good things about the internet is you can watch videos about whatever the thing that you’re interested in is. Get your “fix”, and then patient-gamer it.

Before the net you had to actually buy the thing.

Same. Most of my media collection (TV series, movies, console video games) came from yard sales where I’d find the DVD/Blu-ray box sets for $10 or less. I’m just salty that streaming / digital distribution is chipping away at my frugal media habits lol.

Yes.

Computers are the worst in my opinion, everything is tens to hundreds of times faster by specs and yet it feels as slow as it did in the 90s, I swear.

Network speeds are faster than ever but websites load tons of junk that have nothing to do with the content you’re after, and the networks are run by corpos who only care about making money, and when they have no competition and you need their service, why would they invest in making their systems work better?

Your BS radar has simply improved I’m guessing. Go through a few hype cycles, and you learn the pattern.

Hardware is better than ever. The default path in software is spammier and more extortionist than ever.

The technology has not peaked, the user experience has peaked

The default user experience maybe. Get better software, enjoy the better hardware.

I’ve certainly had the feeling that things aren’t improving as quickly anymore. I guess, it’s a matter of the IT field not being as young anymore.

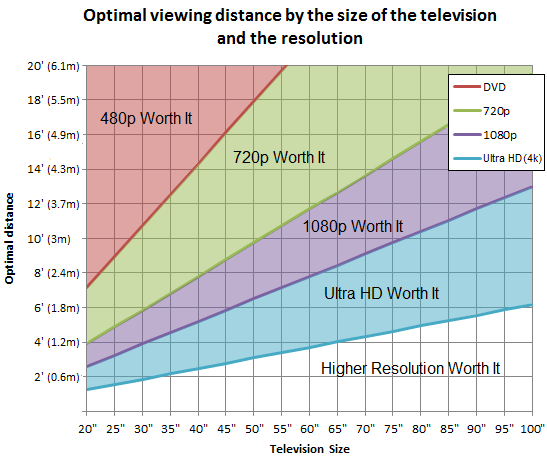

We’ve hit some boundaries of diminishing returns, for example:

- A phone from 5 years ago is still easily powerful enough to run the apps of today. We have to pretend that progress is still happening, by plastering yet another camera lens on the back, and removing yet another micrometer of bezel.

- Resolutions beyond HD are not nearly as noticeable of an upgrade. It often feels like we’re just doing 2K and 4K resolutions, because bigger number = better.

- Games went from looking hyperrealistic to looking hyperrealistic with a few more shrubs in the background.

Many markets are now saturated. Most people have a phone, they don’t need a second one. Heck, the youngest generation often only has a phone, and no PC/laptop. As a result, investors are less willing to bring in money.

I feel like that’s why the IT industry is so horny for market changes, like VR, blockchain, COVID, LLMs etc… As soon as a new opportunity arises, there’s potential for an unsaturated market. What if everyone rushes to buy a new “AI PC”, whatever the fuck that even means…?

Well, and finally, because everyone and their mum now spends a large chunk of their lives online, this isn’t the World Wide West anymore. Suddenly, you’ve got to fulfill regulations, like the GDPR, and you have to be equipped against security attacks. Well, unless you find one of those new markets, of course, then you can rob everyone blind of their copyright and later claim you didn’t think regulations would apply.

I dunno, did they have those alarm clocks you have to chase around 10 years ago?

Maybe? I remember seeing an alarm on Think Geek back in the late 00s that would fire off a helicopter that you’d have to bring back to the base station to turn it off. I was debating between that and the ninja boom alarm clock (which I think had a disk to vibrate the whole damned bed) for a good while.

TV resolution peaked about 10 years ago with 1080p. The improvement to 4K and high dynamic range is minor.

3D gaming has plateaued as well. While it may be possible to make better graphics, those graphics don’t make better games.

Computers haven’t improved substantially in that time. The biggest improvement is maybe usb-c?

Solar energy and battery storage have drastically changed in the last 10 years. We are at the infancy of off grid building, micro grid communities, and more. Starlink is pretty life changing for rural dwellers. Hopefully combined with the van life movement there will be more interesting ways to live in the future, besides cities, suburbs, or rural. Covid telework normalization was a big and sudden shift, with lasting impacts.

Maybe the next 10 years will bring cellular data by satellite, and drone deliveries?

3D gaming has plateaued as well. While it may be possible to make better graphics, those graphics don’t make better games.

I haven’t played it, only seen clips, but have you seen Astro Bot? It’s true that the graphics aren’t really too much better than the PS4, but there’s like a jillion physics objects on the screen at 60fps. It’s amazing. Graphics are still improving, just in different ways.

Strong disagree about the 4k thing. Finally upgraded my aging 13 year old panels for a fancy new Asus 4k 27" and yeah it’s dramatically better. Especially doing either architectural or photographic work on it. Smaller screens you’ve got a point though. 4k on a 5" phone seems excessive.

I mean for television or movies. From across the room 4k is only slightly sharper than 1080p. Up close on a monitor is a different story.

It’s significantly better if you’re actually in the optimal range. Rest of article for image. HDR is fantastic on an OLED. Some cheap sets advertise HDR but it’s crap. I’ll also mention 4K from a disc is massively better than any streaming service I’ve come across. Netflix caps 4K streaming at 25 Mbps and most of my discs are like 75-90 Mbps.
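For a sense of scale on those bitrates, here is a rough Python sketch of how much data each one adds up to over a film. The two-hour runtime is an assumption for illustration, the bitrates are the ones quoted above treated as constant averages, and `size_gb` is a hypothetical helper.

```python
def size_gb(bitrate_mbps, runtime_minutes):
    """Approximate size in decimal gigabytes for a constant average bitrate."""
    megabits = bitrate_mbps * runtime_minutes * 60  # Mbps * seconds
    return megabits / 8 / 1000                      # megabits -> megabytes -> gigabytes

RUNTIME_MIN = 120  # assumed runtime, not from the comment

for label, mbps in [("streaming cap", 25), ("disc, low end", 75), ("disc, high end", 90)]:
    print(f"{label}: {mbps} Mbps -> {size_gb(mbps, RUNTIME_MIN):.1f} GB")
```

At the quoted figures the disc carries roughly three to four times as much picture and sound data as the stream for the same runtime.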

Sorry to make you feel old but 10 years ago 4k was already mainstream, and you would have already had difficulty finding a good new 1080p TV. That is roughly the start of proper HDR being introduced to the very high end models.

Also, maybe you’ve only experienced bad versions of these technologies because they can be very impressive. HDR especially is plastered on everything but is kinda pointless without hardware to support proper local dimming, which is still relegated to high end TVs even today. 4k can feel very noticeable depending on how far you sit from the TV, how large the screen is, and how good one’s eyesight is. But yeah, smaller TVs don’t benefit much. I only ended up noticing the difference after moving and having a different living room setup, sitting much closer to the TV.
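The distance and screen-size point can be sketched with a bit of trigonometry: how many pixels fall within each degree of your field of view. This is a minimal Python sketch, assuming a flat 16:9 panel viewed head-on. The roughly 60 pixels per degree often cited as the limit of 20/20 vision is used only as a rule-of-thumb threshold, and the 55-inch size and the two distances are made-up examples.

```python
import math

ACUITY_PPD = 60  # rule-of-thumb limit for 20/20 vision (about 1 arcminute per pixel)

def pixels_per_degree(h_pixels, diagonal_in, distance_in):
    """Average horizontal pixels per degree of visual angle for a 16:9 screen."""
    width_in = diagonal_in * 16 / math.hypot(16, 9)
    fov_deg = 2 * math.degrees(math.atan(width_in / (2 * distance_in)))
    return h_pixels / fov_deg

for distance_in in (48, 120):  # hypothetical: 4 ft (close) and 10 ft (across the room)
    for name, h_pixels in (("1080p", 1920), ("4K", 3840)):
        ppd = pixels_per_degree(h_pixels, 55, distance_in)
        verdict = "above" if ppd > ACUITY_PPD else "below"
        print(f"55in {name} at {distance_in}in: {ppd:.0f} px/deg ({verdict} ~{ACUITY_PPD})")
```

Under these assumptions, 1080p already sits above the threshold from across the room, so the extra pixels of 4K are hard to see there, while up close 1080p falls below it and 4K does not.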

I wouldn’t call 4K mainstream in 2014 - I feel like it was still high end.

I didn’t have a 4K TV until early 2019 or so when unfortunately, the 1080p Samsung one got damaged during a move. Quite sad - it had very good color despite not having the newest tech, and we’d gotten it second-hand for free. Best of all, it was still a “dumb” TV.

Of course, my definition of mainstream is warped, as we were a bit behind the times - the living room had a CRT until 2012, and I’m almost positive all of the bedroom ones were still CRTs in 2014.