
The increasing use of AI is horrifying. Stop playing Frankenstein! Quit creating thinking beings and using them as slaves.

Hilarious and true.

Last week some new up-and-coming coder was showing me their tons and tons of sites made with the help of ChatGPT. They all look great on the front end. So I tried to use one. Error. Tried to use another. Error. Mentioned the errors and they brushed it off. I am 99% sure they do not have the coding experience to fix the errors. I politely disconnected from them at that point.

What’s worse is when a noncoder asks me, a coder, to look over and fix their AI-generated code. My response is “no, but if you set aside an hour I will teach you how HTML works so you can fix it yourself.” Not one of these kids asking AI to code things has ever accepted, which, to me, means they aren’t worth my time. Don’t let them use you like that. You aren’t another tool they can combine with AI to generate things correctly without having to learn things themselves.

100% this. I’ve gotten to where when people try and rope me into their new million dollar app idea I tell them that there are fantastic resources online to teach yourself to do everything they need. I offer to help them find those resources and even help when they get stuck. I’ve probably done this dozens of times by now. No bites yet. All those millions wasted…

Coder? You haven’t been to university, right?

I’ve been a professional full stack dev for 15 years and dabbled for years before that. I can absolutely code and know what I’m doing (and have used Cursor and just deleted most of what it made for me when I let it run).

But my frontends have never looked better.

Ha, you fools still pay for doors and locks? My house is now 100% done with fake locks and doors, they are so much lighter and easier to install.

Wait! Why am I always getting robbed lately? It cannot be my fake locks and doors! It has to be weirdos online following what I do.

The difference is locks on doors truly are just security theatre in most cases.

Unless you’re the BiLock and it takes the LockPickingLawyer 3 minutes to pick it open.

To be fair, it’s both.

This is satire / trolling for sure.

LLMs aren’t really at the point where they can spit out an entire program, including handling deployment, environments, etc. without human intervention.

If this person is ‘not technical’ they wouldn’t have been able to successfully deploy and interconnect all of the pieces needed.

The AI may have been able to spit out snippets, and those snippets may be very useful, but as it stands, it’s just not going to be able to write the software, stand up the DB, and deploy all of the services needed with no human supervision or overrides. With human guidance, sure, but without someone holding the AI’s hand it just won’t happen (remember, this person is ‘not technical’).

Idk, I’ve seen some crazy complicated stuff woven together by people who can’t code. I’ve got a friend who has no job and is trying to make a living off coding while, for 15+ years, being totally unable to learn coding. Some of the things they make are surprisingly complex. Though also, and the person mentioned here may do similarly, they don’t ONLY use AI. They use GitHub a lot too. They make nearly nothing themselves, but go through GitHub and basically combine large chunks of code others have made with AI-generated code. Somehow they do it well enough to have done things with servers, cryptocurrency, etc., all the while not knowing any coding language.

That reminds me of this comic strip…

It’s further than you think, it’s able to produce basic SaaS apps now.

Did it provision a scalable infrastructure? Because that’s the aaS part of SaaS.

Might be satire, but I think some “products based on LLMs” (not LLMs alone) would be able to. There are some pretty impressive demos out there, but honestly I haven’t tried them myself.

Claude Code can make something that works, but it’s kinda over-engineered and really struggles to make an elegant solution that maximises code reuse. It’s the opposite of DRY.

I’m doing a personal project at the moment and used it for a few days, made good progress but it got to the point where it was just a spaghetti mess of jumbled code, and I deleted it and went back to implementing each component one at a time and then wiring them together manually.

My current workflow is basically never let them work on more than one file at a time, and build the app one component at a time, starting at the ground level and then working in, so for example:

Create base classes that things will extend, then create an example data model class. Iterate on that architecture A LOT until it’s really elegant.

Then I’ve been getting it to write me a generator - not the actual code for models,

Then (level 3) we start with the UI layer, so now we make a UI kit the app will use and reuse for different components.

Then we make a UI component that will be used in a screen. I’m using Flutter as an example, so it would be a stateless component.

We now write tests for the component

Now we do a screen, and I import each of the components.
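For anyone who wants to picture the first three steps, here is a minimal sketch. It is in Python rather than Flutter/Dart, and every name in it (`BaseModel`, `Customer`, `generate_model`) is made up for illustration. The generator step is only described in passing above, so treating it as "emit model source in the same shape as the example model" is my assumption, not necessarily what was actually built.

```python
from dataclasses import asdict, dataclass, fields
from typing import Any


@dataclass
class BaseModel:
    """Step 1: a base class every data model extends (hypothetical name)."""

    def to_dict(self) -> dict[str, Any]:
        # Serialise all dataclass fields into a plain dict.
        return asdict(self)

    @classmethod
    def from_dict(cls, data: dict[str, Any]):
        # Drop unknown keys so payloads with extra fields still load.
        known = {f.name for f in fields(cls)}
        return cls(**{k: v for k, v in data.items() if k in known})


@dataclass
class Customer(BaseModel):
    """Step 2: one hand-written example model to iterate on."""

    name: str
    email: str


def generate_model(class_name: str, spec: dict[str, str]) -> str:
    """Step 3 (assumed): emit source for a new model shaped like Customer."""
    lines = ["@dataclass", f"class {class_name}(BaseModel):"]
    if spec:
        lines += [f"    {field}: {type_name}" for field, type_name in spec.items()]
    else:
        lines.append("    pass")  # keep the generated class syntactically valid
    return "\n".join(lines) + "\n"


customer = Customer.from_dict(
    {"name": "Ada", "email": "ada@example.com", "legacy_flag": True}
)
follow_up_source = generate_model(
    "FollowUp", {"customer_email": "str", "note": "str"}
)
```

The point of the split is that the hand-written `Customer` gets all the review attention, and the generator only ever copies that reviewed shape, which keeps each AI-assisted change small enough to read in one sitting.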

It’s still very manual, but it’s getting better. You are still going to need a human coder, I think forever, but there are two big problems that aren’t being addressed because people are either putting their head in the sand and saying nah, can’t do it, or are like the clown OP in the post who thinks they can do it.

- Because dogs be clownin, the public perception of programming as a career will be devalued: “I’ll just make it myself!” Or, like my rich engineer uncle said to me when I was doing websites professionally, “a 13 year old can just make a website, why would I pay you so much to do it?” THAT FUCKING SUCKS. But a similar attitude has long existed among people who say “I’ll just hire Indians”. This is bullshit, but perception is important, and it’s going to require you to justify yourself for a lot more work.

- And this is the flip side, the good news. These skills you have developed: it’s going to be SO MUCH FUCKING HARDER TO LEARN THEM. When you can just say “hey generate me an app that manages customers and follow ups” and something gets spat out, you aren’t going to put in the grind required to work out basic shit. People will simply not get to the same level they are at now.

That logic about how to scaffold and architect an app in a sensible way - USING AI TOOLS - is actually the new skillset. You need to know how to build the app, and then how to efficiently and effectively use the new tools to actually construct it. Then you need to be able to do code review for each change.

</rant>


Mmmmmm no, Claude definitely is. You have to know what to ask it, but I generated an entire deadman’s switch daemon written in Go in like an hour with it, to see if I could.

So you did one simple program.

SaaS involves a suite of tooling and software, not just a program that you build locally.

You need at a minimum, database deployments (with scaling and redundancy) and cloud software deployments (with scaling and redundancy)

SaaS is a full stack product, not a widget you run on your local machine. You would need to deputize the AI to log into your AWS (sorry, it would need to create your AWS account) and fully provision your cloud infrastructure.

Lol they don’t need scaling and redundancy to work. They just need scaling and redundancy to avoid being sued into oblivion when they lose all their customer data.

As a full time AI hater, I fully believe that some code-specialized AI can write and maybe even deploy a full stack program, with basic input forms and CRUD, which is all you need to be a “saas”.

It’s gonna suck, and be unmaintainable, and insecure, and fragile. But I bet it could do it and it’d work for a little while.

That’s not “working saas” tho.

It’s like calling hello world a “production ready CLI application”.

What makes it “working”, is that the Software part of Software as a Service, is available as a Service.

The service doesn’t have to scale to a million users. It’s still a SaaS if it has one customer with like 4 users.

Is this a pedantic argument? Yes.

Are you starting a pedantic fight about the specific definition of SaaS? Also yes.

My CGI script is a SaaS.


I’m skeptical. You are saying that your team has no hand in the provisioning and you deputized an AI with AWS keys and just let it run wild?

How? We have been trying to adopt AI for dev work for years now, and every time the next-gen tool or model gets released it fails spectacularly at basic things. And that’s just the technical stuff; I still have no idea how to tell it to implement our use cases, as it simply does not understand the domain.

It is great at building things others have already built and that it could train on, but we don’t really have a use case for that.

Ok, but what did they try to do as a SaaS?

Money.

“Come try my software! I’m an idiot, so I didn’t write it and have no idea how it works, but you can pay for it.”

to

“🎵How could this happen to meeeeee🎵”

But I thought vibe coding was good actually 😂

Vibe coding is a hilarious term for this too. As if it’s not just letting AI write your code.

hahahahahahahahahahahaha

Two days later…

Is the implication that he made a super insecure program and left the token for his AI thing in the code as well? Or is he actually being hacked because others are coping?

Nobody knows. Literally nobody, including him, because he doesn’t understand the code!

Nah the people doing the pro bono pen testing know. At least for the frontend side and maybe some of the backend.

But the things doing the testing could be bots instead of human actors, so it may very well be that no human does in fact know.

Thought so too, but nah. Unless that bot is very intelligent and can read and humorously respond to social media posts by setting its fake domain.

Good point! Thanks for pointing that out.

I’m stealing “pro bono pen testing.”

Can’t steal it if it is already pro bono :D

That’s fucking hilarious then.

rofl!

He told them which AI he used to make the entire codebase. I’d bet it’s way easier to RE the “make a full SaaS suite” prompt than it is to RE the code itself once it’s compiled.

Someone probably poked around with the AI until they found a way to abuse his SaaS

Doesn’t really matter. The important bit is he has no idea either. (It’s likely the former and he’s blaming the weirdos trying to get in)

Potentially both, but you don’t really have to ask to be hacked. Just put something into the public internet and automated scanning tools will start checking your service for popular vulnerabilities.


Not just, but he literally advertised himself as not being technical. That seems to be just asking for an open season.

Devil’s advocate, not my actual opinion: if you can make a Thing that people will pay to use, easily and without domain-specific knowledge, why would you not? It may hit issues at some point, but by then you’ve already got ARR and might be able to sell it.

If you started from first principles and made a car or, in this case, told a flailing intelligence precursor to make a car, how long would it take for it to create ABS? Seatbelts? Airbags? Reinforced fuel tanks? Firewalls? Collision avoidance? OBD ports? Handsfree kits? Side impact bars? Cupholders? Those are things created as a result of problems that Karl Benz couldn’t have conceived of, let alone solved.

Experts don’t just have skills, they have experience. The more esoteric the challenge, the more important that experience is. Without that experience you’ll very quickly find your product fails due to long-solved problems, leaving you and your customers exposed to dangers that a reasonable person would conclude shouldn’t exist.

Yeah, arguably and to a limited extent, the problems he’s having now aren’t the result of the decision to use AI to make his product so much as the decision to tell people about it, which led people to deliberately try to sabotage it. I’m careful to qualify that, though, because the self-evident flaw in his plan, even if it only surfaced in a rather extreme scenario, is that he lacks the domain-specific knowledge to actually make his product work as soon as anything becomes more complicated than just collecting the money. Evidently there was more to this venture than just building the software for it to be a viable service. It’s much like considering yourself the ideas man, paying a programmer to engineer the product for you, firing them straight after without hiring anyone to maintain it, keep the infrastructure going, or provide support for your clients, and then claiming you ‘built’ the product. You’d be in a similar position not long after your first paying customer finds out the hard way that you don’t actually know anything about the service you willingly took money for, and can’t actually provide the service part of Software as a Service.

If I were

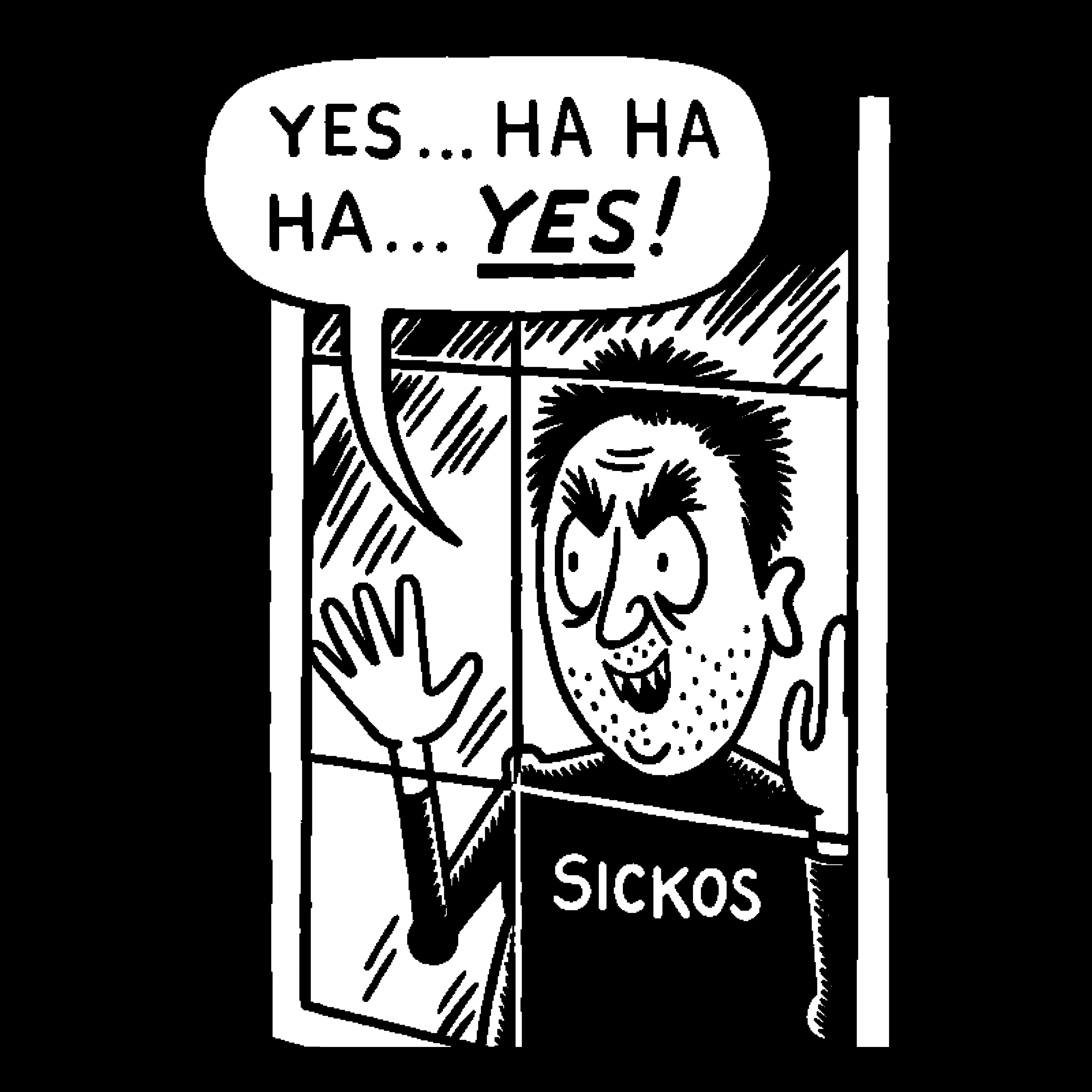

leojr94, I’d be mad as hell about this impersonator doling the good name ofleojr94—most users probably don’t even notice the underscore.Managers hoping genAI will cause the skill requirements (and paycheck demand) of developers to plummet:

Also managers when their workforce is filled with buffoons:

AI is yet another technology that enables morons to think they can cut out the middleman of programming staff, only to very quickly realise that we’re more than just monkeys with typewriters.

We’re monkeys with COMPUTERS!!!

Yeah! I have two typewriters!

I have a mobile touchscreen typewriter, but it isn’t very effective at writing code.

I was going to post a note about typewriters, allegedly from Tom Hanks, which I saw years and years ago; but I can’t find it.

Turns out there’s a lot of Tom Hanks typewriter content out there.

He donated his to my HS randomly. It was supposed to go to the valedictorian, but the school kept it lmao. It was so funny because they showed everyone a video where he says not to keep the typewriter and that it’s for a student.

That’s … Pretty depressing.

To be fair… if this guy had hired a dev team, the same thing could have happened.

True, any software can be vulnerable to attack.

But the difference is that a technical team of software developers can mitigate an attack and patch it. This guy has no tech support other than the AI that sold him the faulty code, which likely assumed he had done the proper hardening of his environment (which he did not).

Openly admitting you programmed anything with AI only is admitting you haven’t done the basic steps to protecting yourself or your customers.

But then they’d have a dev team who wrote the code and therefore knows how it works.

In this case, the hackers might understand the code better than the “author” because they’ve been working in it longer.

Was listening to my go-to podcast during morning walkies with my dog. They brought up an example where some couple was using ShatGPT as a couple’s therapist, and what a great idea that was. Talking about how one of the podcasters has more of a friend like relationship to “their” GPT.

I usually find this podcast quite entertaining, but this just got me depressed.

ChatGPT is by the same company that stole Scarlett Johansson’s voice. The same vein of companies that thinks it’s perfectly okay to pirate 81 terabytes of books, despite definitely being able to afford paying the authors. I don’t see a reality where it’s ethical or indicative of good judgement to trust a product from any of these companies with information.

I agree with you, but I do wish a lot of conservatives used ChatGPT or other AIs more. It, at the very least, will tell them all the batshit stuff they believe is wrong and clear up a lot of the blatant misinformation. With time, will more batshit AIs be released to reinforce their current ideas? Yeah. But ChatGPT is trained on enough (granted, stolen) data that it isn’t prone to retelling the conspiracy theories. Sure, it will lie to you and make shit up when you get into niche technical subjects, or ask it to do basic counting, but it certainly wouldn’t say Ukraine started the war.

It will even agree that AIs shouldn’t be controlled by oligarchic tech monopolies and should instead be distributed freely and fairly for the public good, but that the international system of nation states competing against each other militarily and economically prevents this. But maybe it will agree to the opposite of that too; I didn’t try asking.