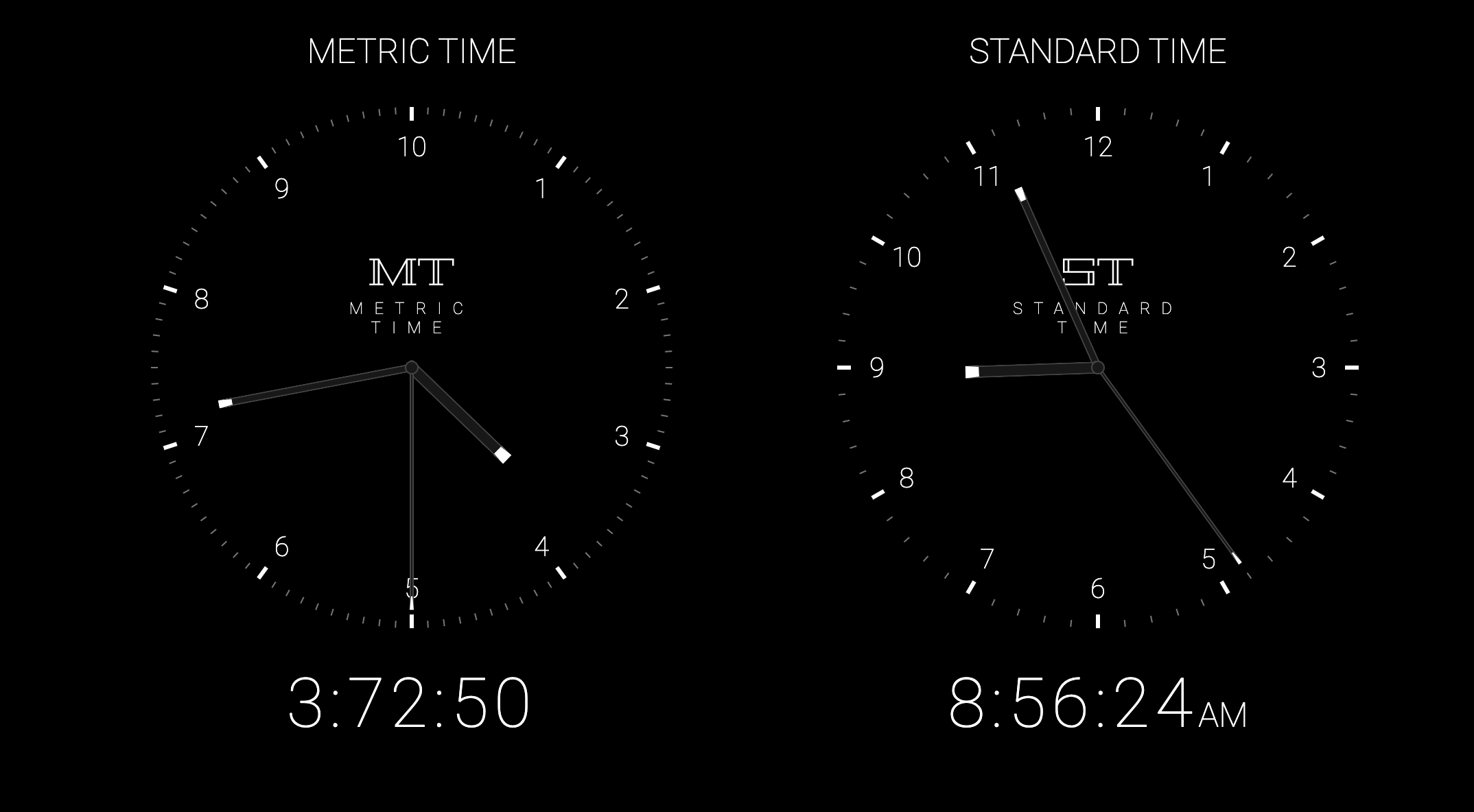

Now I finally know how it feels to be a real American

For once I hate metric (time)

The advantage of 12 and 60 is that they’re extremely easy to divide into smaller sections. 12 can be divided into halves, thirds, and fourths easily. 60 can be divided into halves, thirds, fourths, and fifths. So ya, 10 isn’t a great unit for time.
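You can check the divisibility argument above by listing the whole-number divisors of each candidate base. This is a minimal Python sketch. `divisors` is an illustrative helper, not a library function.

```python
def divisors(n: int) -> list[int]:
    """Return every positive integer that divides n with no remainder."""
    return [d for d in range(1, n + 1) if n % d == 0]

# Compare the bases that come up in this thread.
for base in (10, 12, 24, 60, 100):
    print(base, divisors(base))
```

For 60 this prints twelve divisors, including 3, 4, 5 and 6. For 100 it prints nine, and 3 is not among them.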

My vote is power-of-two based. Everything should be binary. It divides up so much more easily, and counting is better.

I don’t understand how it’s any easier than using 100 and dividing…

1/2 an hour is 30 min; 1/2 an hour, if metric used 100, is 50 min

1/4 an hour is 15 min; 1/4 of a metric hour is 25 min

Any lower than that and they both get tricky…

1/8 an hour is 7.5 min; 1/8 of a metric hour is 12.5 min
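The comparison above can be reproduced with exact fractions. The sketch below adds 1/3 alongside the fractions already listed. It assumes a hypothetical 100-minute "metric" hour. `minutes_in` is an illustrative helper.

```python
from fractions import Fraction

def minutes_in(fraction: Fraction, minutes_per_hour: int) -> Fraction:
    """Exact number of minutes in `fraction` of an hour."""
    return fraction * minutes_per_hour

# Compare a 60-minute hour with a hypothetical 100-minute metric hour.
for frac in (Fraction(1, 2), Fraction(1, 3), Fraction(1, 4), Fraction(1, 8)):
    standard = minutes_in(frac, 60)
    metric = minutes_in(frac, 100)
    print(f"{frac} hour: {float(standard):g} min vs {float(metric):g} metric min")
```

Both hours handle 1/2, 1/4 and 1/8 in whole or half minutes. Only the 60-minute hour gives a whole number for 1/3 (20 min, against 33.333… metric min).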

Getting used to metric time would be impossible to implement worldwide, I reckon, but I struggle to understand how it’s any less simple than the 60-minute hour we have and the 24-hour day…

And 1/3 of 100 is 33.333…, repeating forever. There are strong arguments for a base 12 number system (https://en.wikipedia.org/wiki/Duodecimal), and some folks have already put together a base 12 metric system for it. 10 is really quite arbitrary if you think about it. I mean we only use it because humans have 10 fingers, and it’s only divisible by 5 and 2.
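The 1/3 example follows a general rule. The fraction 1/n has a finite expansion in base b exactly when every prime factor of n also divides b. The sketch below applies that rule to base 10 and base 12. The helper names are illustrative.

```python
def prime_factors(n: int) -> set[int]:
    """Distinct prime factors of n, found by trial division."""
    factors = set()
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors.add(p)
            n //= p
        p += 1
    if n > 1:
        factors.add(n)
    return factors

def terminates(n: int, base: int) -> bool:
    """True if 1/n has a finite (non-repeating) expansion in `base`."""
    return all(base % p == 0 for p in prime_factors(n))

for n in range(2, 13):
    print(f"1/{n}: base 10 {terminates(n, 10)}, base 12 {terminates(n, 12)}")
```

Base 12 gives finite expansions for 1/3 and 1/6 but not for 1/5. Base 10 is the reverse.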

That said, the best argument for sticking with base 10 metric is that it’s well established. And base 10 time would make things more consistent, even if it has some trade offs.

I will always upvote base-12 superiority posts

Ancient central Americans used a base 12 number system and counted on their fingers using finger segments (3 per finger, 4 fingers, 12 segments). Makes fractions way more intuitive

The clock should go from 0.0 to 1.0. Dinner time would be around 0.7083 o-clock.

> Dinner time would be around 0.7083 o-clock.

This is fairly similar to .beat time; in that system you would write it as @708. I guess you could make it @708.3 to be more specific.
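The value 0.7083 is just 17/24, which is 17:00 written as a fraction of the day. Multiplying by 1000 gives the .beat-style number. This is a minimal sketch. It assumes dinner at 17:00 and uses local wall-clock time. Real Swatch .beats are pinned to Biel time instead. `day_fraction` is an illustrative helper.

```python
from datetime import time

def day_fraction(t: time) -> float:
    """Fraction of the day elapsed at wall-clock time t (0.0 = midnight)."""
    seconds = t.hour * 3600 + t.minute * 60 + t.second + t.microsecond / 1e6
    return seconds / 86_400

dinner = time(17, 0)  # assumed dinner time
print(round(day_fraction(dinner), 4))         # 0.7083
print(f"@{day_fraction(dinner) * 1000:.1f}")  # @708.3
```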

I loved the idea behind Swatch’s .beats. A “beat” was 86.4 seconds, slightly short of 1.5 minutes, so totally usable in everyday life. If you need more precision, decimals (as suggested above) are allowed.

However, one big issue with it is that it’s based on local time in Biel, Switzerland, and is the same for everyone around the world. That might not be a big problem for Europeans, but while e.g. @000 is midnight in Biel, it’s early morning in Australia and afternoon/evening in the US.

And the second, bigger issue becomes obvious when you start looking at the days. E.g. people in the US would start work @708 on a Tuesday and finish @042 on Wednesday. Good luck scheduling your meetings like this.
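The Tuesday/Wednesday split is easy to reproduce. Swatch Internet Time divides the day into 1000 beats of 86.4 seconds each. The beats are counted on Biel Mean Time, a fixed UTC+1 with no daylight saving. This sketch assumes that "the US" in the example means the Pacific time zone, with an 8:00 to 16:00 workday in January (PST, UTC-8). `swatch_beats` is an illustrative helper.

```python
from datetime import datetime, timedelta, timezone

BMT = timezone(timedelta(hours=1))  # Biel Mean Time: fixed UTC+1, no daylight saving

def swatch_beats(moment: datetime) -> float:
    """Swatch Internet Time (.beats) for a timezone-aware datetime."""
    t = moment.astimezone(BMT)
    seconds = t.hour * 3600 + t.minute * 60 + t.second + t.microsecond / 1e6
    return seconds / 86.4  # 86,400 s per day / 1,000 beats

pacific = timezone(timedelta(hours=-8))  # PST as a fixed offset, for illustration
start = datetime(2024, 1, 9, 8, 0, tzinfo=pacific)  # 2024-01-09 was a Tuesday
end = datetime(2024, 1, 9, 16, 0, tzinfo=pacific)   # same Tuesday, 16:00 PST

for label, moment in (("start", start), ("end", end)):
    bmt_day = moment.astimezone(BMT).strftime("%A")
    print(label, f"@{swatch_beats(moment):03.0f}", bmt_day)
```

It prints `@708` on Tuesday for the start and `@042` on Wednesday for the end. The same local workday straddles two Biel dates.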

Neat, but holy good fucking god, the amount of programming it would take if this were ever changed going forward, not to mention how historical times would be referenced. Datetime programming is already such a nightmare.

I sit in a cubicle and I update bank software for the 2000 metric switch.

Lol. Seriously though, for something like this these days, it will be interesting to see what happens given that we will have to face the year 2038 problem. This kind of thing was still doable for the 2000 switch because of the relatively small number of devices and software, but given the number of devices and software now, let alone in 2038, I really have no idea how it’s going to be managed.
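The year 2038 problem affects systems that store Unix time as a signed 32-bit count of seconds since 1970-01-01 UTC. This sketch shows the last representable moment and where the counter lands after it wraps. It uses `timedelta` arithmetic instead of `fromtimestamp`, so negative values behave the same on every platform. `to_int32` is an illustrative helper.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1

def to_int32(x: int) -> int:
    """Wrap x the way a signed 32-bit two's-complement counter would."""
    return (x + 2**31) % 2**32 - 2**31

last_ok = EPOCH + timedelta(seconds=INT32_MAX)
wrapped = EPOCH + timedelta(seconds=to_int32(INT32_MAX + 1))
print(last_ok)  # 2038-01-19 03:14:07+00:00
print(wrapped)  # 1901-12-13 20:45:52+00:00
```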

Although it only affects software/devices that store time as a signed 32-bit integer.

I think you meant to link decimal time per Wikipedia?

they are slightly different

No. But they are so similar, I’d be happy to adopt either. In fact, decimal time was actually used briefly.

Here is the link you wanted:

I feel like I’m going crazy at moments like this.

Wikipedia on Metric Time:

> The modern SI system defines the second as the base unit of time, and forms multiples and submultiples with metric prefixes such as kiloseconds and milliseconds.

Edit: Note that this is the SI second, not a decimal second.

Wikipedia on Decimal Time:

> This term is often used specifically to refer to the French Republican calendar time system used in France from 1794 to 1800, during the French Revolution, which divided the day into 10 decimal hours, each decimal hour into 100 decimal minutes and each decimal minute into 100 decimal seconds.

With metric time the day is broken into 10 hours.

A metric hour is broken into 100 minutes.

A metric minute is broken into 100 seconds.

So either Wikipedia or the website is wrong.
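Whatever you call it, the 10/100/100 scheme is simple to convert to. A day has 86,400 ordinary seconds and 10 × 100 × 100 = 100,000 decimal seconds, so you scale and then split. This is a sketch. `to_decimal_time` is an illustrative helper, and it truncates to whole decimal seconds.

```python
from datetime import time

def to_decimal_time(t: time) -> tuple[int, int, int]:
    """Convert a wall-clock time to decimal (hours, minutes, seconds).

    The day is split into 10 hours of 100 minutes of 100 seconds,
    i.e. 100,000 decimal seconds per day (1 decimal second = 0.864 SI seconds).
    """
    si_seconds = t.hour * 3600 + t.minute * 60 + t.second
    decimal_seconds = si_seconds * 100_000 // 86_400  # truncate to whole decimal seconds
    hours, rest = divmod(decimal_seconds, 10_000)
    minutes, seconds = divmod(rest, 100)
    return hours, minutes, seconds

print(to_decimal_time(time(12, 0)))  # (5, 0, 0)
print(to_decimal_time(time(18, 0)))  # (7, 50, 0)
```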

Then Wikipedia is wrong? Because what the website you linked calls metric time, Wikipedia calls decimal time.

I could get used to that, it’s actually a great idea. Though probably impossible to implement due to inertia, at least currently.

The proof that it’s probably never possible is that metric time was already adopted during the French Revolution, when they were metricising everything else, and even they decided that it wasn’t worthwhile.

Yeah but don’t make the units “metric hours/minutes/seconds” for crying out loud. Make another unit of measurement.

US miles, or Scottish, or Swedish, or what? Reusing a word for a different meaning is never the solution.

Not bad, but I prefer Swatch Internet Time

Me too, if only the standard weren’t held by a private company.

This feels like an April fools joke.

Ridiculous concept. If you can’t do the math, get an app or ask an adult.

As someone who has written a ridiculous amount of code that deals with date and time, I support this 100%.

Great! Now you’ll not only need to convert between timezones but also between metric and standard time.

Also the respective intervals adjusted by leap days and leap seconds will be different!

Why settle for 24 when you can have a hundred fractions?

Maybe I’m not understanding this right. A quick Google search shows that there are 86,400 seconds in a day. With metric time, an hour is 10,000 seconds. That means that a day would be 8.64 hours, but on this clock it’s 10? How does that work?

One metric second != one (conventional) second

So it’s not using the SI second? That’s a bit weird

I guess it could make sense. Reading a bit more, it looks like the second was originally defined as a fraction (1/86,400) of a day. Using 1/100,000 wouldn’t be that crazy. But beyond just fucking up all our software and time-measuring tools, it would also completely change a lot of physics/chemistry formulas (or, to be more precise, the constants in those formulas). Interesting thought experiment, but I feel that changing the definition of the second in particular would affect soooo mucchhh.
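Here is the arithmetic behind this exchange. It assumes the decimal second is defined as 1/100,000 of a day. In that case the clock keeps its 10 hours per day, and each decimal hour is simply longer than an ordinary one:

$$
1\ \text{decimal second} = \frac{86\,400\ \text{s}}{10 \times 100 \times 100} = \frac{86\,400\ \text{s}}{100\,000} = 0.864\ \text{s}
$$

$$
1\ \text{decimal hour} = 10\,000 \times 0.864\ \text{s} = 8\,640\ \text{s} = 2.4\ \text{h}, \qquad 10 \times 2.4\ \text{h} = 24\ \text{h}
$$

The 8.64 figure only appears if the decimal hour is built from 10,000 SI seconds, which gives $86\,400 / 10\,000 = 8.64$ hours per day.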

A friend once mused that “wearing a watch is like being handcuffed to time.” It’s one of the reasons I stopped being a watch guy (though mostly because I checked it compulsively, yet didn’t know what time it was when a coworker saw me check and asked me the time).

Anyway, I prefer to try not to keep track of the time unless I need to be somewhere.

If you want to go the middle way, you could consider a one-hand watch.

Thank you, crossposted.

Pair it with International Fixed Calendar and I’m on board.

I noticed this in my senior year of high school (1991). So much easier. Every month has a Friday the 13th too! Lol
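The Friday-the-13th property comes straight from the calendar's structure. In the International Fixed Calendar, every month has exactly 28 days (four full weeks) and starts on a Sunday, so the 13th of every month is a Friday. This sketch covers common years only. The extra month Sol sits between June and July, and day 365 is the week-less Year Day. Leap Day is deliberately left out. `ifc_date` is an illustrative helper.

```python
WEEKDAYS = ["Sunday", "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday"]
MONTHS = ["January", "February", "March", "April", "May", "June", "Sol",
          "July", "August", "September", "October", "November", "December"]

def ifc_date(day_of_year: int) -> str:
    """Map day 1..365 of a common year to an International Fixed Calendar date.

    13 months x 28 days = 364 days; day 365 is "Year Day", outside any week.
    Leap years (Leap Day) are ignored in this sketch.
    """
    if not 1 <= day_of_year <= 365:
        raise ValueError("day_of_year must be in 1..365 (common years only)")
    if day_of_year == 365:
        return "Year Day"
    month, day = divmod(day_of_year - 1, 28)
    weekday = WEEKDAYS[day % 7]  # every month starts on a Sunday
    return f"{weekday}, {MONTHS[month]} {day + 1}"

print(ifc_date(13))   # Friday, January 13
print(ifc_date(181))  # Friday, Sol 13
```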

The new year should start with spring though, not in the middle of winter.

While we’re at it, the day should start at sunrise, not in the middle of the night.

I used to joke about this with my Canadian coworkers. Didn’t know it was an actual thing?