Hi comrades. My username is soviet entropy, and the entropy part is not doomerism or a memento mori. Entropy is imo a vital part of modern materialist analysis. This post is an introduction to the concept and how it relates to political economy.

1. Information is Physical

“Information” is often considered to be immaterial. Many definitions of it treat it that way, and many definitions of it are also bad and incoherent. So for us as marxists and materialists, what is information?

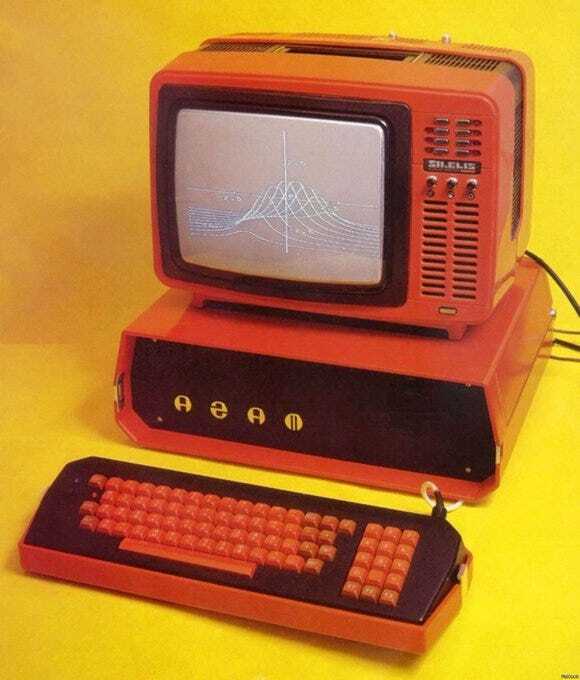

Let’s take a sequence. It could be a sequence of atoms or molecules or DNA base pairs. I’m going to have it be numbers for this demonstration. Here is the sequence: [0, 0, 0, 0, 1, 1, 1, 1]

How much information does this sequence contain? Well let’s compare it to another sequence: [0, 0, 0, 0, 0, 0, 0, 0]

Now imagine we want to perfectly re-create these sequences somewhere else. We need to store them first. If we wanted to store these sequences by compressing them, it would be easier to store the second one than the first one. We can compress the first by saying “repeat 0 four times, then 1 four times”. To compress the second, we only need to say “repeat 0 eight times”. The less you can compress a sequence, the more information it has. This is sometimes called Shannon Information Theory.
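To make the compression idea concrete, here is a minimal sketch in Python using run-length encoding, which is just the “repeat X this many times” trick written as code. The function name `run_length_encode` and the variable names are my own choices for illustration, and run-length encoding is only one simple compression scheme among many.

```python
from itertools import groupby

def run_length_encode(seq):
    """Collapse each run of repeated symbols into a (symbol, count) pair."""
    return [(symbol, len(list(run))) for symbol, run in groupby(seq)]

# The two sequences described above.
first = [0, 0, 0, 0, 1, 1, 1, 1]   # "repeat 0 four times, then 1 four times"
second = [0] * 8                   # "repeat 0 eight times"

print(run_length_encode(first))    # [(0, 4), (1, 4)]
print(run_length_encode(second))   # [(0, 8)]
```

The second sequence needs one instruction, the first needs two. A sequence with no runs at all would need one instruction per symbol, which is no compression.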

Imagine we have a completely random sequence. If there is no pattern to it, then the smallest way we can store it is simply writing the entire thing down. Sure, it’s not information that is useful to humans, but it’s information.

But what does this have to do with Entropy or Materialism?

2. Entropy

Entropy is a simple concept that physicists have made difficult to understand. I think this is because they are often idealist or dualist and not materialist.

Imagine we have a six sided die that is perfectly fair. The numbers 1 thru 6 have a perfectly equal chance of coming up. We’re gonna roll it a bunch of times.

Now, how surprised would we be if a 2 comes up? Not very surprised. If we roll it again and a 3 comes up, again we are not surprised. If we roll it 4 more times and only get 2 and 3, then we are going to be more surprised. We can say that our surprise is inversely related to the probability of something happening. And indeed this is a concept in statistics called “surprise”. If something has a 1/10 chance of happening, we would be less surprised by it happening than by something with a 1/100 chance. We define surprise as the logarithm of the inverted probability. So for the 1/10 and 1/100 things, they have a surprise of log(10) and log(100). We only do this log thing to make something with a chance of 100% have 0 surprise. Without the log, its inverted probability 1/1 would give it a surprise of 1.
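The surprise definition above is short enough to write down directly. This is a small sketch; the function name `surprise` is my own, and I am assuming the natural logarithm (any base works, it only changes the units).

```python
import math

def surprise(p):
    """Surprise of an event with probability p: the log of the inverted probability."""
    return math.log(1 / p)

print(surprise(1 / 10))    # log(10)
print(surprise(1 / 100))   # log(100), larger because the event is rarer
print(surprise(1.0))       # 0.0, a certain event is no surprise at all
```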

Now imagine we knew the chances of events happening. Say we know that a die is fair, or we have observed a process for a long time and now know the typical things it does. We can then form an expectation for our surprise: take the surprise of each event that could happen, weight it by how likely that event is to happen, and add it all up. That total expected surprise is what Entropy is.

Let’s go back to the sequences. Remember, these could be numbers or atoms of a metallic crystal or DNA base pairs. Let’s say we have the sequence: []

The probability of getting a 0 is 3/8 (we count the number of 0’s).

So the surprise is log(8/3).

The probability of getting a 1 is 5/8 so the surprise is log(8/5).

The Entropy of this sequence is therefore: 3/8 * log(8/3) + 5/8 * log(8/5) ≈ 0.66 (using the natural logarithm)

If we do it for this sequence: [0, 0, 0, 0, 0, 0, 0, 0]

We get 8/8 * log(8/8) = 0

Take a minute here if you want. Something might be about to click! Sequences with low information, like 0 repeated eight times, also have low Entropy! That’s because Entropy is Information. They are the same thing. The amount of entropy in a sequence is how much information it contains and also how difficult it would be to compress it.
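Here is a short sketch of the entropy calculation above, for anyone who wants to check the numbers. The function name `entropy` is my own, it uses the natural logarithm, and the ordering of the three 0’s and five 1’s in `mixed` is an assumption (only the counts matter for this calculation).

```python
import math
from collections import Counter

def entropy(seq):
    """Expected surprise: each symbol's surprise weighted by its frequency."""
    counts = Counter(seq)
    n = len(seq)
    return sum((c / n) * math.log(n / c) for c in counts.values())

mixed = [0, 0, 0, 1, 1, 1, 1, 1]   # three 0's and five 1's, order assumed
uniform = [0] * 8                  # 0 repeated eight times

print(round(entropy(mixed), 2))    # 0.66
print(entropy(uniform))            # 0.0
```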

3. Increasing Entropy and Production

So why does Entropy increase?

This is not from some hand of God or a mystical force. Here’s why it happens.

Imagine a sequence of random numbers that you get from rolling a six sided die. Maybe we get [].

Now, of all the possible sequences we can have for numbers rolled from a die, how many of them have that many 1’s? Not that many. If something causes some of those numbers to be re-rolled, there are way more outcomes where there are fewer 1’s. In fact, the most likely outcome would be one where the sequence gets more random. There are way more sequences of 8 numbers from a die where there is a roughly equal amount of each number. And these sequences also have higher Entropy. So with random change over time, Entropy tends to increase.
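The counting argument can be checked directly. This is a sketch with a helper I am calling `count_sequences`, which counts how many distinct orderings of eight rolls have a given number of each face (a multinomial coefficient). The specific face counts below are illustrative examples of my own.

```python
from math import factorial

def count_sequences(face_counts):
    """Number of distinct roll sequences with the given count of each face."""
    total = factorial(sum(face_counts))
    for c in face_counts:
        total //= factorial(c)
    return total

# Each list says how many times faces 1..6 appear in 8 rolls.
print(count_sequences([8, 0, 0, 0, 0, 0]))   # 1      (all 1's)
print(count_sequences([6, 1, 1, 0, 0, 0]))   # 56     (mostly 1's)
print(count_sequences([2, 2, 1, 1, 1, 1]))   # 10080  (roughly even)
print(6 ** 8)                                # 1679616 possible sequences in total
```

There is exactly one way to roll all 1’s and over ten thousand ways to get that one roughly even mix, so random re-rolling drifts toward the even, high-Entropy end.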

But how do we remove Entropy? Well that takes energy. And it’s what production is.

Let’s say we have a sequence of aluminum atoms in a big sheet of aluminum. This is a very, very low entropy/low information material. To make it useful, we in fact want to put our own information into it. We use a big press to stamp a pattern into the aluminum and turn it into a car body. But in order to do this, we first needed to remove all the entropy that accrued over time from the random movements of matter and energy in the earth’s crust. Aluminum ore has tons of entropy because of all the other atoms bonded to the aluminum and the other rocks and things in it. There are way more ways for 1kg of aluminum atoms to be in a hunk of ore than in a sheet of metal. So we first take out information and then put our own information in. We use energy to decrease the entropy and then increase it again toward what we want.

The same goes for printing a book, or for making a chair, or printing semiconductor chips, or building a cargo ship.

Finale

I hope you all found this interesting. Materialism is not just a belief that the universe is governed by rules or anything like that. Materialism means that the physical world of particles and energy is the only thing that is real. Information is often thought to be a human construct but it is not. It is a real, physical, material thing.

The relevance to modern political economy and marxist analysis can be gone over in more detail if anyone wants. One of the most important results is that in a situation where production and distribution are mediated by money and markets between equals, the maximum entropy situation is the same as the distribution of energy in a chamber of gas molecules. Meaning that over time there will be a large mass of very poor people and an ever smaller group of ever wealthier people.
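To illustrate the gas analogy, here is a toy simulation. It is a sketch under my own assumptions, not a model of any real economy: everyone starts with the same amount of money, and random pairs of agents pass one unit at a time, like molecules trading energy in collisions. The function name `simulate_exchange` and all the parameter values are hypothetical choices for the demo.

```python
import random
from statistics import median

def simulate_exchange(agents=500, start=10, steps=200_000, seed=0):
    """Toy market: everyone starts equal, then random pairs trade one unit of money."""
    rng = random.Random(seed)
    money = [start] * agents
    for _ in range(steps):
        payer = rng.randrange(agents)
        payee = rng.randrange(agents)
        # Money is conserved: a unit only moves if the payer has one to give.
        if payer != payee and money[payer] > 0:
            money[payer] -= 1
            money[payee] += 1
    return money

money = simulate_exchange()
mean = sum(money) / len(money)
print("mean:", mean)                # stays at 10.0 because money is conserved
print("median:", median(money))     # what the typical agent ends up holding
print("richest:", max(money))
print("agents with nothing:", money.count(0))
```

Even though every exchange is between equals, the run should end with most agents holding less than the average and a few holding several times the average.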

Nice to see some solid materialist philosophy presented here. I would say that the most important political conclusion from this analysis is that market socialism is not viable in the long term. It will reproduce massive inequalities no matter what (modern China being a great example of this).

Also, in a post-monetary economy, entropy flows can become a proxy for measuring economic growth, although to be honest, measuring the entropy changes of industrial production accurately seems difficult right now.

And don’t forget that even without money, we will still be able to measure embodied labor content. Even if the social arrangements don’t let embodied labor content be what determines distribution, it will still be measurable. Although one would have to fix it relative to a specific year, otherwise the growth in embodied labor will just be the size of the proletariat times the average hours worked.

The thing about embodied labor content is that it is easy to measure (bourgeois statisticians already measure it), but all it tells you is the size of the working population. It is not a measure of total output, especially because different economies have different productivity.

I just remembered, Marx conceived of value/exchange value as economic analogues of heat, labor-power as the economic analogue of power, and price as the analogue of temperature.

Expanding on this scheme, we might think of a market of commodities as a mixture of ideal gases (different products), whose entropy is then proportional to the logarithm of the number of microstates of the mixture. Interestingly, mixing 2 gases (at the same temperature and pressure) increases their entropy according to the following formula:

Change in entropy = n * R * (x1 * ln(1/x1) + x2 * ln(1/x2))

Where n is the total number of moles of the gas, R is the gas constant, and x1 is the fraction of the total moles belonging to gas 1 and so on. ln(x) is the natural logarithm (logarithm with Euler’s number e as base). Basically, mixing 2 gases increases their entropy and is preferable to the gases staying separate. The formula also predicts that the entropy change is maximized when there is an equal number of moles of each gas.
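The claim that the entropy change peaks at an equal split is easy to check numerically. Below is a small sketch; `mixing_entropy` is a name I made up, and it returns the entropy change in units of the gas constant R (multiply by R ≈ 8.314 J/(mol·K) to get joules per kelvin).

```python
import math

def mixing_entropy(n, x1):
    """Entropy of mixing two ideal gases, in units of the gas constant R."""
    x2 = 1 - x1
    # A gas with zero mole fraction contributes nothing, so skip it.
    return n * sum(x * math.log(1 / x) for x in (x1, x2) if x > 0)

for x1 in (0.1, 0.3, 0.5, 0.7, 0.9):
    print(x1, round(mixing_entropy(1.0, x1), 4))
```

The printed values rise up to x1 = 0.5, where the result is ln(2), and fall off symmetrically on either side.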

Converting this into its economic analogue, whatever the economic version of entropy is, it is increased when you add more types of commodities into the market, and it increases most when there is a balance between the types of commodities.

I can’t say for sure whether or not an economic version of the second law of thermodynamics applies to this system, but if it does, it would imply that capitalist markets continuously grow in variety of commodities over time, and there is a preference against 1 type of commodity becoming the dominant commodity sold in a market.

The political implications of this could actually be quite important, as it suggests that a free market economy (with actually free markets, not ruled by oligarchs thumbing the scales) tends towards equal distribution of production amongst sectors. There is other work by some Marxists like Paul Cockshott which says that a “balanced economy” is virtually guaranteed to collapse, because a developed industrialized economy requires a large heavy industry sector to sustain itself.

All of these predictions seem to be consistent with observation today. The developed countries of the world are only standing due to the existence of countries like China which thumb the scale and maintain huge heavy industry sectors. The western economies that liberalized moved towards balanced economies and thus lost their ability to be self-sufficient.

I think you would very much enjoy the book “Classical Econophysics”!